An AI-IDE for Kubernetes

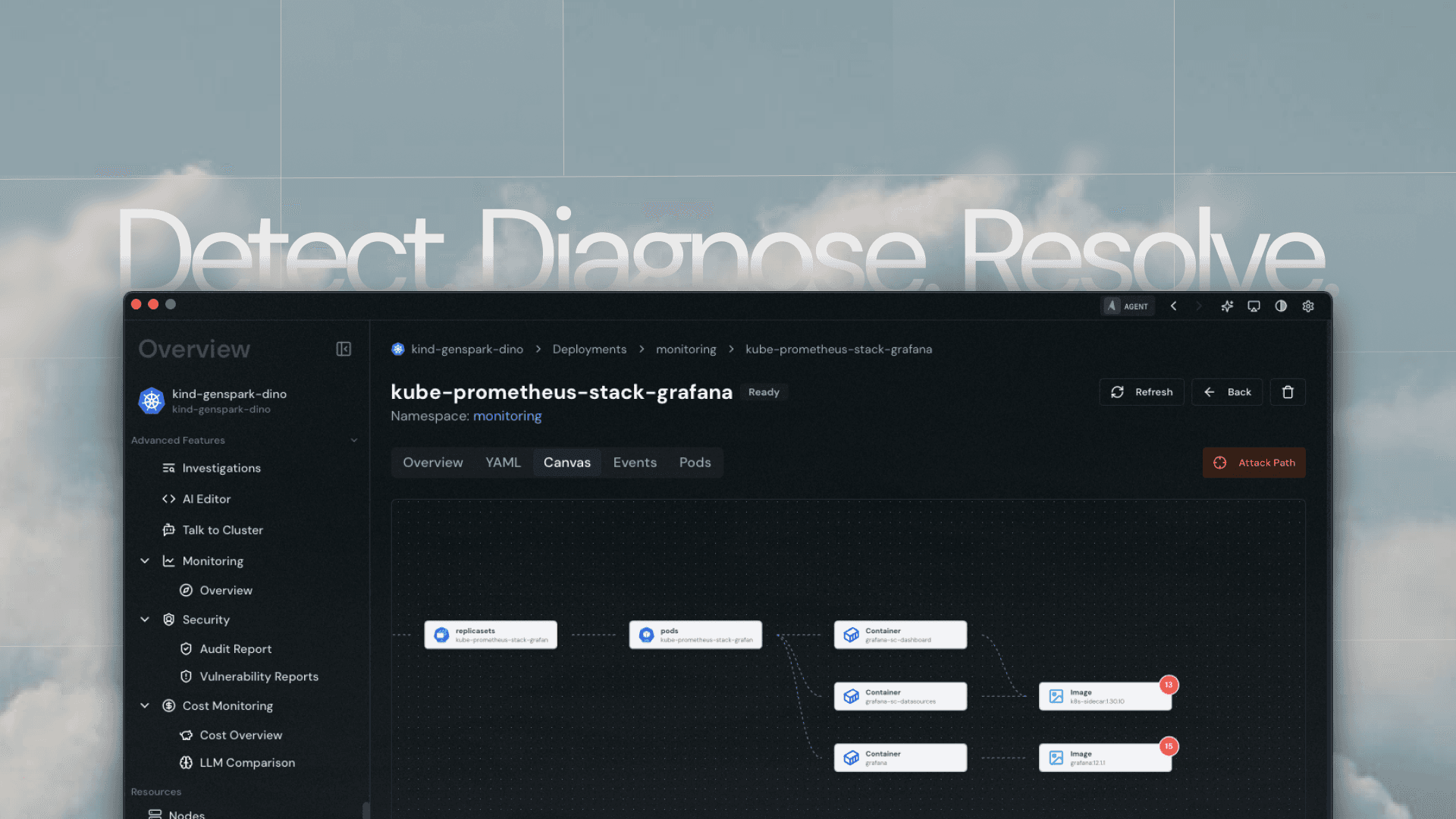

Agentkube is a desktop-based Kubernetes IDE designed to reduce operational friction and incident resolution time by combining local cluster access, structured Kubernetes context, and bounded AI agents.

The core idea behind Agentkube is that most Kubernetes failures are not caused by a lack of tooling but by context fragmentation: metrics live in one place, logs in another, YAML elsewhere, and human reasoning happens outside the system. Agentkube brings these signals together inside a local, trusted control plane and augments them with AI agents that assist in diagnostics, remediation, and cluster understanding.

Problem

Kubernetes tooling today suffers from three systemic problems:

Context-switching overload: Engineers jump between dashboards, logs, metrics, traces, docs, and Slack during incidents. Each tool holds partial context and none has the full picture, so correlating metrics with logs becomes manual work.

Broken trust boundaries: Most web-first platforms require uploading kubeconfigs, metrics, or logs to remote servers, which is unacceptable in many production or regulated environments.

Ungrounded AI: Existing AI-powered Kubernetes tools often operate on partial data, hallucinate fixes, or lack deterministic boundaries, which makes them risky during incidents.

From an engineering standpoint, the core problem is not missing data, but missing structure and the right context.

Why This Problem Matters

Kubernetes is now mission-critical infrastructure, but teams still operate it through fragmented tools that force engineers to manually stitch together metrics, logs, configs, and alerts under pressure. This context fragmentation, not a lack of tooling, is what drives long MTTRs, alert fatigue, burnout, and real business impact during incidents. As systems scale, the problem only gets worse, and unbounded AI adds risk by guessing without full context. Production infrastructure must be operable at human scale: teams need clarity, trust, and grounded intelligence that works within security boundaries, not more dashboards or black-box automation.

Solution

Agentkube solves the problem by bringing all Kubernetes operational context into a single, trusted AI IDE instead of scattering it across dashboards and tools. It unifies logs, metrics, events, configs, cost, and security signals into one continuous workflow, so engineers don’t have to reconstruct context during incidents. Its AI is safety-first and bounded: agents assist with investigation, correlation, and root-cause analysis, but never act autonomously or outside user-defined limits. By running locally alongside the cluster and keeping data within trust boundaries, it avoids sending kubeconfigs, metrics, or logs to remote servers.

User Journey

1. The user provides a failing resource or alert (e.g., a crashing pod or a deployment error), which Agentkube uses as the entry point for the investigation.
2. Agentkube plans what context the resource requires: related pods, services, configs, recent changes, metrics, logs, and events.
3. Using custom tooling or the Model Context Protocol (MCP), it fetches the required traces, metrics, and signals from existing observability (o11y) systems such as the LGTM stack, SigNoz, Datadog, or New Relic.
4. The signals are correlated into a single structured view.
5. Agents reason over this bounded context to identify the root cause.
6. Agentkube plans a safe remediation path with explicit tool calls.
7. Execution requires human-in-the-loop approval for every action, and all actions run within strict safety boundaries.
8. Outcomes are validated and surfaced back to the user with full transparency and control.
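The correlation step (turning fetched signals into a single structured view) can be illustrated with a minimal sketch. It assumes signals from events, logs, and metrics have already been fetched and normalized into a hypothetical `Signal` record; the `correlate` function and the 10-minute window are illustrative choices, not Agentkube's actual implementation. It keeps only signals for the failing resource and its related resources within a time window before the failure, then orders them into one timeline.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Signal:
    """Hypothetical normalized record for any fetched signal."""
    source: str         # e.g. "event", "log", "metric"
    timestamp: datetime
    resource: str       # e.g. "pod/api", "deploy/api"
    message: str


def correlate(signals, related, failure_time, window=timedelta(minutes=10)):
    """Return one time-ordered view of the signals relevant to a failure.

    Keeps signals that belong to a resource in `related` and fall inside
    [failure_time - window, failure_time], then sorts them by timestamp so
    events, logs, and metrics interleave in a single timeline.
    """
    start = failure_time - window
    relevant = [
        s for s in signals
        if s.resource in related and start <= s.timestamp <= failure_time
    ]
    return sorted(relevant, key=lambda s: s.timestamp)


# Example: a pod failure at t0 with signals from several sources.
t0 = datetime(2024, 1, 1, 12, 0)
signals = [
    Signal("log", t0 - timedelta(minutes=2), "pod/api", "OOMKilled"),
    Signal("event", t0 - timedelta(minutes=5), "deploy/api", "image updated"),
    Signal("metric", t0 - timedelta(hours=1), "pod/api", "memory at 40%"),  # outside window
    Signal("log", t0 - timedelta(minutes=1), "pod/db", "slow query"),       # unrelated resource
]
timeline = correlate(signals, {"pod/api", "deploy/api"}, t0)
for s in timeline:
    print(s.timestamp.time(), s.source, s.message)
```

Here the deployment's image update lands right before the OOMKill in the timeline, while the hour-old metric and the unrelated database log are filtered out. That ordered, bounded view is the kind of context an agent can then reason over.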

Agentkube was built as a zero-deployment solution to avoid adding cost and overhead inside clusters. Every in-cluster component consumes resources, so Agentkube runs entirely outside the infrastructure: one agent manages multiple clusters with no extra in-cluster compute or memory cost.
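Because nothing is installed in the cluster, a single local process can address several clusters through the contexts in a kubeconfig. The sketch below assumes the kubeconfig has already been parsed from YAML into a dict and maps each context name to its cluster's API server URL; the function name and the example clusters are hypothetical, and only the field layout follows the standard kubeconfig format.

```python
def contexts_to_servers(kubeconfig: dict) -> dict:
    """Map each kubeconfig context name to its cluster's API server URL.

    In the standard kubeconfig layout, `contexts[].context.cluster` names an
    entry in `clusters[]`, whose `cluster.server` holds the API server URL.
    """
    servers = {
        c["name"]: c["cluster"]["server"] for c in kubeconfig.get("clusters", [])
    }
    return {
        ctx["name"]: servers.get(ctx["context"]["cluster"])
        for ctx in kubeconfig.get("contexts", [])
    }


# Hypothetical kubeconfig with two clusters, already parsed from YAML.
kubeconfig = {
    "current-context": "staging",
    "clusters": [
        {"name": "prod-cluster", "cluster": {"server": "https://prod.example.com:6443"}},
        {"name": "staging-cluster", "cluster": {"server": "https://staging.example.com:6443"}},
    ],
    "contexts": [
        {"name": "prod", "context": {"cluster": "prod-cluster", "user": "admin"}},
        {"name": "staging", "context": {"cluster": "staging-cluster", "user": "dev"}},
    ],
}

# One local process can enumerate and target every cluster it has credentials for.
for name, server in contexts_to_servers(kubeconfig).items():
    print(f"{name}: {server}")
```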

Tech Stack Decisions

When building a cross-platform desktop app, the practical options are limited: most tools of the past few years have been built either on Electron or natively. As the Rust ecosystem matured, it unlocked a better alternative. Agentkube uses Tauri, a Rust-based framework, because it delivers significantly smaller binaries (~8–10 MB vs 200 MB+), lower memory usage (~170 MB vs 400 MB+), and a reduced attack surface by not bundling Chromium and Node.js. For a performance-critical, security-sensitive Kubernetes IDE, those properties matter.

Intelligence Layer

As AI agent frameworks rapidly emerged (LangGraph, OpenAI Agents SDK, Anthropic SDK, CrewAI, Letta AI, Google ADK, Microsoft Agent-Lightning, and others), each promised to be “the only framework you need.” I experimented with multiple options, switching frameworks several times, but in practice none were production-ready for Agentkube’s needs: some were strong at orchestration but weak at control, while others were good at tooling but lacked safety or flexibility. These frameworks were evolving monthly, and relying on their release cycles introduced risk and coupling. After studying how mature tools like OpenCode, Aider, and Codex were built, I chose a minimal, framework-less approach using the core OpenAI SDK and designed a custom agent, tool, and safety layer around it. This gave full control, predictable behavior, and the flexibility required for a security-critical, human-in-the-loop system like Agentkube.
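A custom tool and safety layer of this kind can be sketched without any framework. The sketch assumes the model returns each tool call as a name plus a JSON-encoded argument string, the shape used by OpenAI-style function calling; the `ToolRegistry` class, the `read_only` flag, the namespace allowlist, and both example tools are hypothetical illustrations rather than Agentkube's code. Unknown tools and out-of-bounds namespaces are rejected, and mutating tools return "pending" until a human approves them.

```python
import json
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    name: str
    func: Callable[..., str]
    read_only: bool  # mutating tools require explicit human approval


class ToolRegistry:
    """Only registered tools can run, and every call is checked against boundaries."""

    def __init__(self, allowed_namespaces: set[str]):
        self.tools: dict[str, Tool] = {}
        self.allowed_namespaces = allowed_namespaces

    def register(self, tool: Tool) -> None:
        self.tools[tool.name] = tool

    def dispatch(self, name: str, arguments_json: str, approved: bool = False) -> str:
        tool = self.tools.get(name)
        if tool is None:
            return f"rejected: unknown tool {name!r}"
        args = json.loads(arguments_json)  # model-supplied arguments as JSON text
        namespace = args.get("namespace")
        if namespace not in self.allowed_namespaces:
            return f"rejected: namespace {namespace!r} is out of bounds"
        if not tool.read_only and not approved:
            return f"pending: {name} requires human approval"
        return tool.func(**args)


# Hypothetical tools; a real implementation would call the Kubernetes API here.
def get_pod_logs(namespace: str, pod: str) -> str:
    return f"(logs for {namespace}/{pod})"


def restart_deployment(namespace: str, deployment: str) -> str:
    return f"restarted {namespace}/{deployment}"


registry = ToolRegistry(allowed_namespaces={"staging"})
registry.register(Tool("get_pod_logs", get_pod_logs, read_only=True))
registry.register(Tool("restart_deployment", restart_deployment, read_only=False))

print(registry.dispatch("get_pod_logs", '{"namespace": "staging", "pod": "api-0"}'))
print(registry.dispatch("restart_deployment", '{"namespace": "staging", "deployment": "api"}'))
print(registry.dispatch("delete_namespace", '{"namespace": "staging"}'))
print(registry.dispatch("get_pod_logs", '{"namespace": "kube-system", "pod": "x"}'))
```

Keeping the boundary checks in the dispatcher, rather than inside each tool, means a model-proposed call cannot skip them, and the rules stay readable in one place.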

Architecture Decisions

While building Agentkube as a desktop application, there were two viable approaches: build the entire system in Rust or decouple the application into independent components. Although a full Rust stack was attractive for performance and safety, the AI ecosystem at the time was heavily centered around Python and TypeScript, with minimal Rust support. To avoid constraining the intelligence layer and to keep the system adaptable, Agentkube was designed with a decoupled architecture.

This separation also aligned with long-term goals. By keeping the backend loosely coupled to the desktop UI, the same services could later run outside the desktop app, in-cluster, or as a standalone service, similar to how Headlamp structures its architecture, enabling multiple deployment models without architectural rewrites. Given how fast AI frameworks were evolving, shipping breaking changes every few weeks, continuously tracking and re-integrating them would have been unsustainable. A minimal, custom-built intelligence layer instead reduced churn, avoided biweekly refactors driven by upstream framework changes, and allows new capabilities to be added without destabilizing the core product.

Challenges & Trade-offs

One of the biggest challenges was navigating a fast-moving AI ecosystem while building a production-grade system. When Agentkube was being designed, most agent frameworks (LangChain, LangGraph, OpenAI Agents SDK, etc.) were evolving monthly, often introducing breaking changes or missing critical pieces. Some frameworks were good at orchestration but weak at control, others supported tooling but lacked human-approval semantics or Model Context Protocol (MCP) compatibility. Relying on these abstractions would have tightly coupled Agentkube’s core logic to external release cycles, forcing frequent refactors. This led to the trade-off of avoiding heavy frameworks and instead building a custom, minimal intelligence layer on top of core SDKs.

Another key trade-off was the choice of language and architecture. A pure Rust implementation would have been ideal, but the lack of Rust support across AI tooling made that path impractical. Decoupling the system (Rust for the desktop UI, Go for the proxy server, and Python for the AI services) introduced complexity but kept it flexible and future-proof. Even though Python's startup time was noticeably slow, this approach let Agentkube integrate with the broader AI ecosystem and remain deployable beyond the desktop, including potential in-cluster or standalone deployments.

What didn’t work as expected was assuming that existing frameworks would handle human-in-the-loop execution, safe tool calls, and custom generative UI flows out of the box. These turned out to be first-order problems that required explicit design, not configuration. The biggest learning was that in real infrastructure systems, control, predictability, and explicit boundaries matter far more than agent autonomy or clever abstractions, and that building less, but deliberately, was the only sustainable way forward.
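Human-in-the-loop execution is one of the pieces that needed explicit design. A minimal sketch, assuming a remediation plan is a list of explicit steps, each with an action and a validation check: the hypothetical `run_plan` asks for approval before every step, halts on the first rejection or failed validation, and returns an outcome for each step so results can be surfaced to the user. The `approve` callback stands in for whatever prompt the real UI shows; none of these names come from Agentkube itself.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    description: str               # shown to the human before anything runs
    action: Callable[[], str]      # the explicit tool call to execute
    validate: Callable[[], bool]   # checks that the step had the intended effect


@dataclass
class Outcome:
    step: str
    status: str  # "done", "failed-validation", "rejected", or "skipped"
    detail: str = ""


def run_plan(steps: list[Step], approve: Callable[[str], bool]) -> list[Outcome]:
    """Execute a plan one approved step at a time; stop at the first problem."""
    outcomes: list[Outcome] = []
    halted = False
    for step in steps:
        if halted:
            outcomes.append(Outcome(step.description, "skipped"))
            continue
        if not approve(step.description):  # nothing runs without approval
            outcomes.append(Outcome(step.description, "rejected"))
            halted = True
            continue
        detail = step.action()
        if step.validate():
            outcomes.append(Outcome(step.description, "done", detail))
        else:
            outcomes.append(Outcome(step.description, "failed-validation", detail))
            halted = True
    return outcomes


# Example with simulated cluster state instead of real API calls.
state = {"replicas": 1}


def scale_up() -> str:
    state["replicas"] = 3
    return "scaled api to 3 replicas"


plan = [
    Step("Scale deployment api to 3 replicas", scale_up, lambda: state["replicas"] == 3),
    Step("Delete stuck pod api-0", lambda: "deleted api-0", lambda: True),
    Step("Roll back api image", lambda: "rolled back", lambda: True),
]

# This approver accepts only scaling actions, standing in for a UI prompt.
results = run_plan(plan, approve=lambda desc: desc.startswith("Scale"))
for o in results:
    print(o.status, "-", o.step)
```

Halting instead of continuing after a rejection is a deliberate choice in this sketch: later steps in a remediation plan usually assume the earlier ones succeeded.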

Download Agentkube: agentkube.com